|

In the first of three blog posts on ADFv2 parameter passing, Azure Data Factory (ADFv2) Parameter Passing: Date Filtering (blog post 1 of 3), we pretty much set the ground work. Now that I hope y'll understand how ADFv2 works, let's get rid of some of the hard-coding and make two datasets and one pipeline work for all tables from a single source. You know I have to say it ... can SSIS "show me [this] money"? This solution consists of three ADFv2 objects (yes, only three!!)

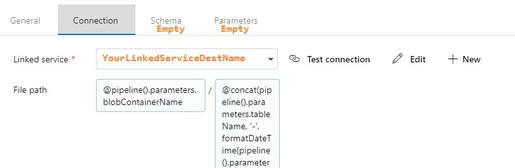

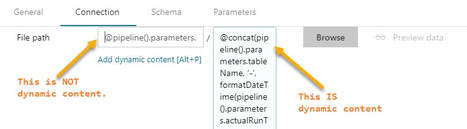

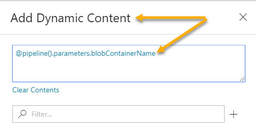

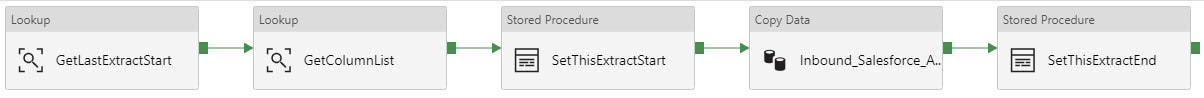

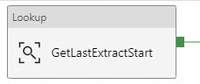

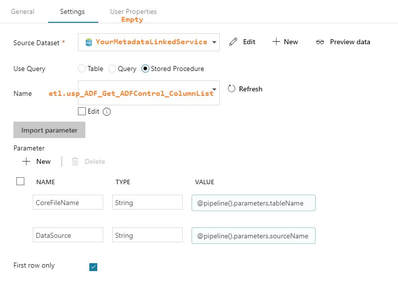

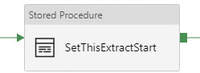

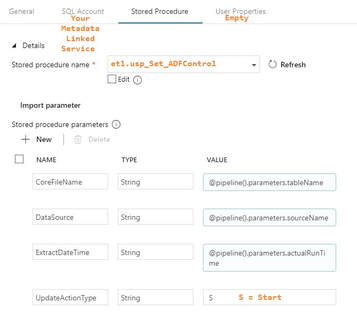

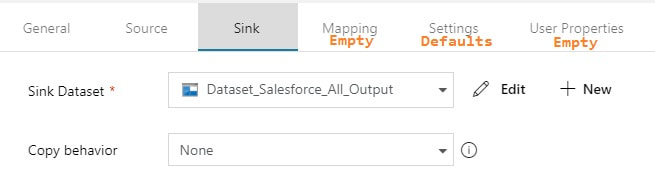

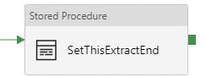

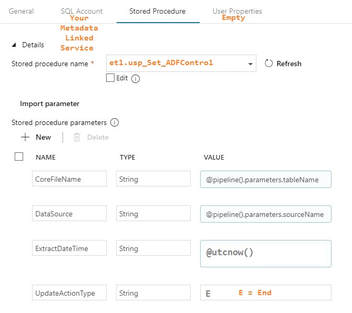

Assumption: You have read blog post 1 of 3 on parameter passing with ADFv2 and now understand the basic concept of parameter passing and concatenating values in ADFv2. Summary: This blog post builds on the first blog and will give you additional examples of how to pass a table name as dynamic content. It will show you how to use a 1.) single input dataset, 2.) single output dataset and 3.) single pipeline to extract data from all tables in a source. Setup: The JSON for all datasets and pipelines is attached below, but if you take a moment to understand the screen prints, it might be easier than reading JSON. 1. Single Input Dataset 2. Single Output Dataset File path prefix --> @pipeline().parameters.blobContainerName File path suffix --> @concat(pipeline().parameters.tableName, '-', formatDateTime(pipeline().parameters.actualRunTime, 'yyyy'), formatDateTime(pipeline().parameters.actualRunTime,'MM'), formatDateTime(pipeline().parameters.actualRunTime,'dd'),' ' , formatDateTime(pipeline().parameters.actualRunTime,'hh'), formatDateTime(pipeline().parameters.actualRunTime, 'mm'), '.txt') Tip: Dynamic content and text values that contain an "@pipline..." syntax are not the same thing. 3. Single ADFv2 Pipeline The primary add-in for this blog post is the lookup for a column list and the additional parameter being used for the table name. The @pipeline().parameters.actualRunTime value is passed by an Azure Logic App not explained here, or you could use the pipeline start or a utcdate. In blog post 3 of 3 we are going to put in a ForEach loop that will allow us to spin through a list of tables. Lookup Activity - GetLastExtractStart Lookup Activity -GetcolumnList Execute Stored Procedure - SetThisExtractStart Copy Activity This is where it all comes together. You concatenate your looked up column list with your looked up table name, and now you are using a single input dataset for all source tables.  @concat('SELECT ', activity('GetColumnList').output.firstRow.ColumnList, ' FROM ', pipeline().parameters.tableName, ' WHERE SystemModstamp >= ', formatDateTime(activity('GetLastExtractStart').output.firstRow.NextExtractStartDateTime,'yyyy-MM-ddTHH:mm:ssZ')) Execute Stored Procedure - SetThisExtractEnd The Conclusion of the Matter: I've taken the time to provide all of these screen prints in loving memory of SSIS. Once you transition, you can figure most of this out with a bit of persistence, but isn't it nice to have screen prints to show you the way sometimes? The most challenging part of this is the @concat() of the source copy activity. Every data source will require this in their own syntax (SOSQL, t-sql etc.), or beware -- in the syntax of the ODBC driver that is sitting behind Microsoft's data connector. Link to Azure Data Factory (ADF) v2 Parameter Passing: Date Filtering (blog post 1 of 3) Link to Azure Data Factory (ADF) v2 Parameters Passing: Linked Services (blog post 3 of 3) Supporting Downloadable Files:

6 Comments

Noel Ramirez

4/10/2019 08:50:43 am

Did you publish the third part of this article? Thanks for the article.

Reply

5/19/2019 03:54:04 pm

Here you go, Noel. https://www.delorabradish.com/azure/azure-data-factory-adf-v2-parameters-passing-linked-services-blog-post-3-of-3

Reply

Ewin Kiser

5/24/2019 09:58:04 am

Amazing Blog!! This and Part 3 is essentially what I have to do and I have been lost until I read your Blog!!! Thanks! Keep publishing these Blogs of Complex ADF mechanisms. There is really no where else that explains this like Part 2 and Part 3!

Reply

Sohan

9/26/2020 06:54:07 pm

I have gone through the article but it would be great if you could explain in detail what you are trying to do in the Blog 1, 2, and 3. There is no detailed explanation what exactly is happening in the Blog 2.

Reply

5/18/2022 08:37:23 am

Hello Delora Bradish, Awesome Article! very interesting and informative content of Azure Data Factory Blog. I hope you will share some more content about it. Please keep sharing and Thanks for the Great Article!!

Reply

Leave a Reply. |

|||||||||||||||

| Microsoft Data & AI | All Things Azure |

RSS Feed

RSS Feed