|

I just had the pleasure of deleting in my blog backlog, three ADFv1 blog posts in the making. The arrival of Azure Data Factory v2 (ADFv2) makes me want to stand up and sing Handel's Hallelujah Chorus. Yes, my fine friend, ADFv2 is a real game player now. Let us begin! Assumptions:

Summary: This blog post will give you examples of the following ADFv2 functionality

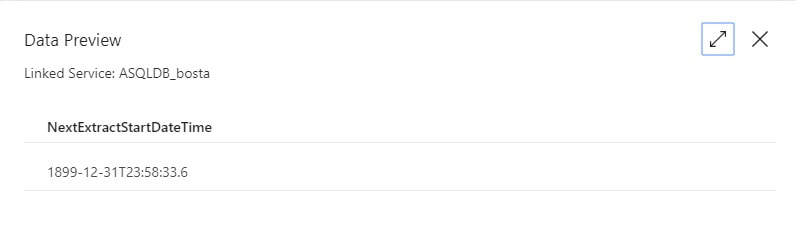

Setup To get "this extract start time" we obviously have to incorporate a metadata layer in our solution. ADFv2 can now Lookup to Cosmos DB as well as several other data sources listed here, but I'm an old fashioned SQL Server lady and I prefer to use an Azure Database for things like this. It doesn't hurt to keep tables and procs, like what is included in the sample t-sql file at the end of this post, in an Azure Data Warehouse, but honestly, replicated 60 times? If you can afford it, stand up a simple Azure SQL Database for metadata collection. Step #1: Create your ADFv2 control objects in your metadata SQL database

Step #2: Create your three ADFv2 linked services (data connections)

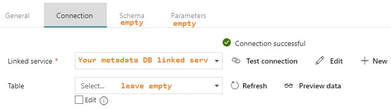

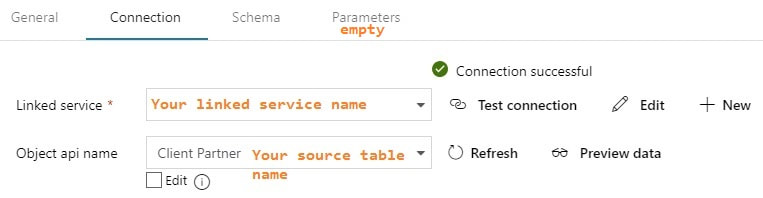

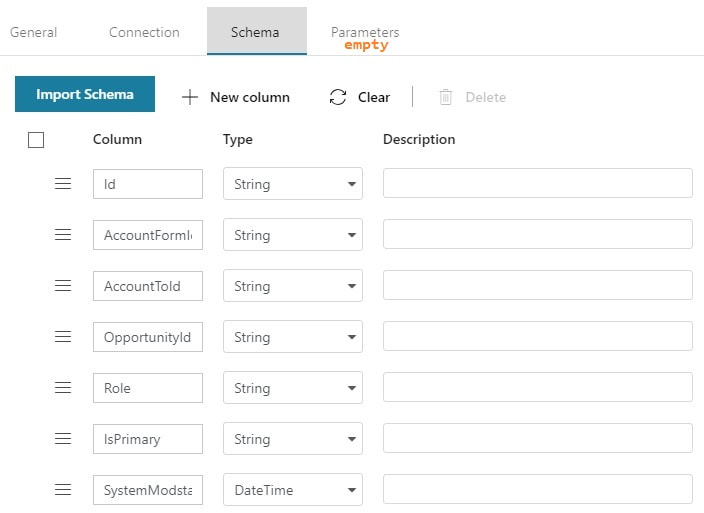

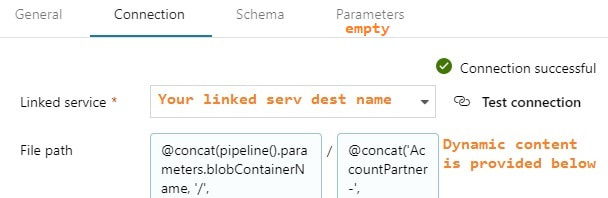

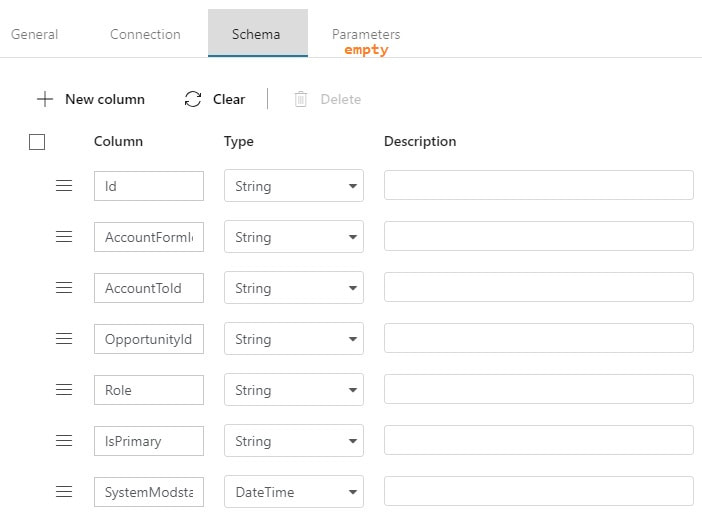

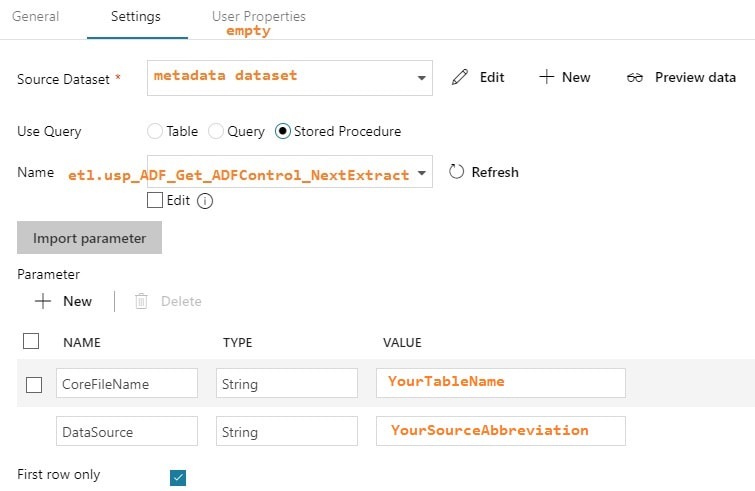

Step #3: Create your ADFv2 datasets I am going to show you screen prints here, but the JSON for these datasets is provided at the end of this blog post. This blog post uses a hard-coded table name. In blog post 2 of 3, I'll show you how to use a single input and output dataset to extract all tables from a single source. metadata DB used for all objects Your lookup and execute SQL tasks will use this same dataset. input dataset for your source This is your source dataset. You actually do not need to define every column, but we'll tackle that later. output dataset for your destination This is your destination dataset definition. You actually do not need to define every column here either, but more on that later.

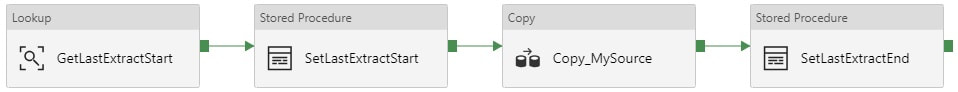

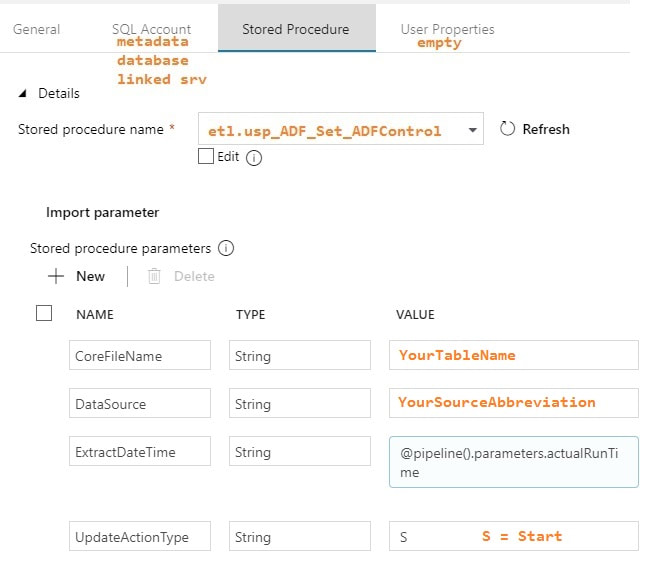

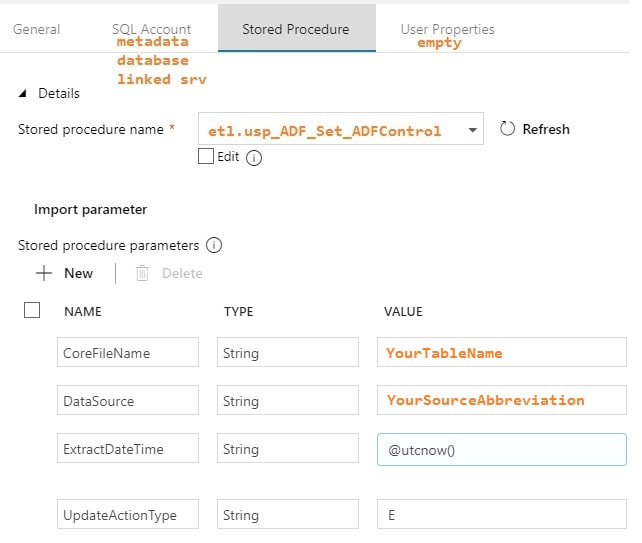

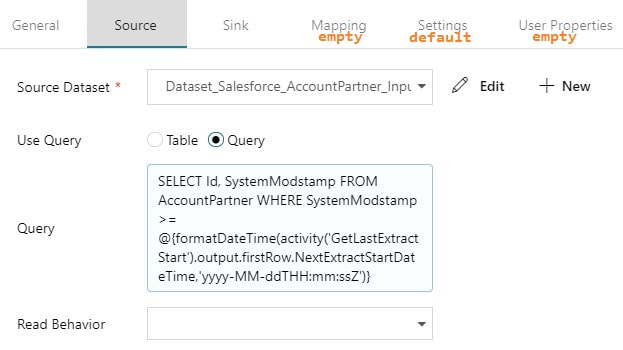

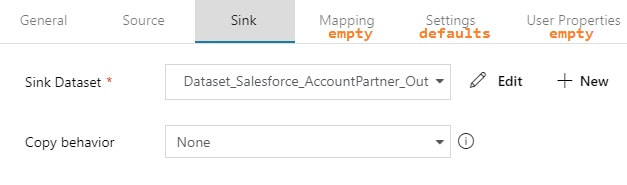

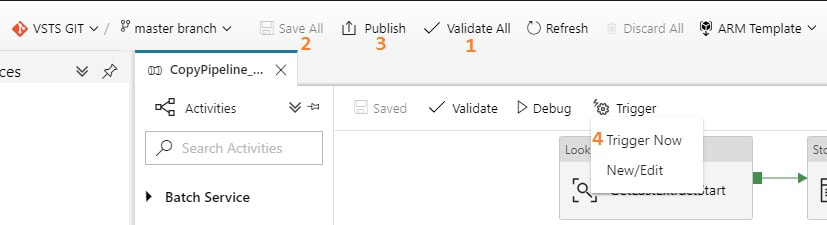

You may be thinking, "This is a lot of stuff to just import one table!" but stay tuned. I promise you, you will have less development time, cost of ownership, and less downtime by moving data with ADFv2 then you would with SSIS packages especially if you have > 2GB of data in a source object. Using parameters is the key to success. Step #4: Create your ADFv2 pipeline Lookup Activity: GetLastExtractStart Stored Procedure Activities: Set Start and End Dates Copy Activity: The actual copy data from source to destination filtered by YourSource.LastModifiedDate If you are new to ADFv2, here are a couple of screen prints on how to validate, save, publish and run your pipeline on demand. Conclusion of the Matter This blog post is intended for developers just starting out in ADFv2, and especially for ADFv2 developers pulling Salesforce data. I do not expect you to have needed all the information provided, but I am hoping you'll find a tip or trick that will save you time. Referencing activities and pipeline parameters is easy to do, for example, but when I first worked on this, I found very few examples. Finding the right syntax for a Salesforce date filter took me about a week. You may be frustrated transitioning from SSIS to ADFv2, but don't give up. Remember that ADFv2 is not a data transformation tool -- it is a data copy tool. SSIS <> ADFv2, and in a world where we are moving to MPP architectures and ELT via stored procedures, ADFv2 as an "orchestrator" is worth considering. Supporting Downloadable Files

9 Comments

Mike Kiser

5/28/2019 07:45:26 am

Awesome Awesome Article. It made me feel better then you said that it took you a week to get the syntax correct because I am experiencing the same. Your article is the first that I have found that covers what I need to do. I have been working on a very similar project for 3 weeks total with very little to show. Your article explains everything VERY clearly and like you say in the article there is very little out there on this topic. Thanks for all 3 parts!!

Reply

Saiprasad

8/30/2019 05:07:35 am

I really loved your constructive details, I have one similar scenario where I have just started working on creating pipeline, but strucked in one of the scenario where I have to do stream catch-up when ever the stream run date is less than current date, if not exit...If there is any such scenario, kindly help me in creating the pipeling..if you need any further details, kindly tell me

Reply

11/11/2019 08:50:48 pm

Saiprasad, I am unable to provide custom solutions, but you can reach out to Pragmatic Works for a consulting contract. Kindly, Delora

Reply

Nandini

12/4/2019 10:01:40 pm

Very well explained!!! It helped me a lot to build the framework using ADFv2. Thanks a lot for all the 3 posts.

Reply

Rizal Ang

1/8/2020 02:39:42 am

Excellent post Delora! learn a ton from you thank you so much.

Reply

Rizal, no extra formatting is needed. Just copy and paste the JSON provided; however, I did find it much easier in the end to setup a SQL metadata table which passed a correctly formatted WHERE clause. It was also much easier to use a SQL view to use logic to pass 'full load' or 'incremental load' parameter values. Anytime I find myself in a big brew-ha-ha in ADF expressions, I move it out to a SQL table with a Lookup() activity to pull what I need into ADF. Kindly, Delora

Reply

6/1/2022 07:58:49 am

Greetings of the Day delorabradish! The great Azure Data Factory blog and information shared are also very appreciable. You deserve a huge thanks for this wonderful post. This is really an excellent post. thanks!!

Reply

Leave a Reply. |

|||||||||||||||||

| Microsoft Data & AI | All Things Azure |

RSS Feed

RSS Feed