|

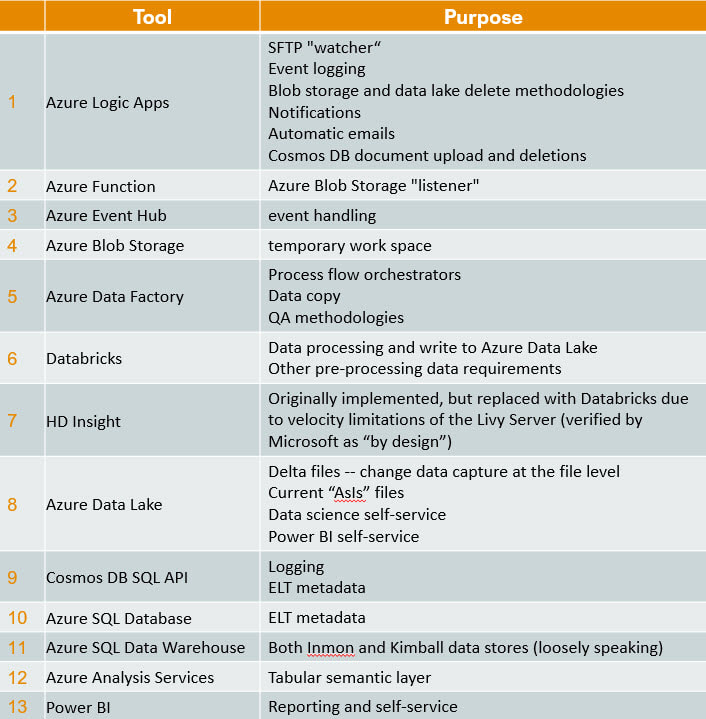

This is my second attempt to articulate lessons learned from a recent global Azure implementation for data and analytics. My first blog post became project management centered. You can find project management tips here. While writing it, it became apparent to me that the tool stack isn't the most important thing. I'm pretty hard-core Microsoft, but in the end, success was determined by how well we coached and trained the team, not what field we played on.

Turning my attention in this blog post to technical success points, please allow me to start off by saying that with over twenty countries dropping data into a shared Azure Data Lake, my use of "global" (above) is no exaggeration. I am truly not making this all up by compiling theories from Microsoft Docs. Second, the most frequent question people ask me is "what tools did you use?", because migrating from on-prem Microsoft SSIS or Informatica to the cloud can feel like jumping off the high dive at the community pool for the first time. Consequently, I'm going to provide the tool stack list right out of the gate. You can find a supporting diagram for this data architecture here.

Microsoft Azure Tool Stack for Data & Analytics

Hold on, your eyes have already skipped to the list, but before you make an "every man for himself" move and bolt out of the Microsoft community pool, please read my migration blog post. There are options! For example, I just stood up an Azure for Data & Analytics solution that has no Logic Apps, Azure Functions, Event Hubs, Blob Storage, Databricks, HDInsight (HDI), or Data Lake. The solution is not event-driven and takes an ELT (extract, load, and then transform) approach. It reads from sources via Azure Data Factory and writes to an Azure Database, logging the ELT activities in an Azure Database as well. Now, how simple is that? Kindly, you don't have to build the Taj MaSolution to be successful.
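To make the ELT-with-logging idea above a little more concrete, here is a minimal sketch in Python. It is not the solution I built: an in-memory SQLite database stands in for the Azure Database, plain Python stands in for Azure Data Factory, and the table names (`stage_sales`, `sales_by_country`, `elt_log`) are hypothetical. The only point is the order of operations: load the raw rows first, transform inside the database afterwards, and log each step in the same database.

```python
import sqlite3
from datetime import datetime, timezone


def run_elt(conn, source_rows):
    """Load raw rows, transform them inside the database, and log each step."""
    cur = conn.cursor()
    # Hypothetical tables: a staging table, a reporting table, and an activity log.
    cur.execute("CREATE TABLE IF NOT EXISTS stage_sales (country TEXT, amount REAL)")
    cur.execute("CREATE TABLE IF NOT EXISTS sales_by_country (country TEXT PRIMARY KEY, total REAL)")
    cur.execute("CREATE TABLE IF NOT EXISTS elt_log (step TEXT, row_count INTEGER, logged_at TEXT)")

    def log(step, row_count):
        # Record what happened and when, in the same database as the data.
        cur.execute(
            "INSERT INTO elt_log VALUES (?, ?, ?)",
            (step, row_count, datetime.now(timezone.utc).isoformat()),
        )

    # Extract + Load: land the source rows untouched in the staging table.
    cur.executemany("INSERT INTO stage_sales VALUES (?, ?)", source_rows)
    log("load", len(source_rows))

    # Transform: reshape the data with SQL only after it has been loaded.
    cur.execute("DELETE FROM sales_by_country")
    cur.execute(
        "INSERT INTO sales_by_country "
        "SELECT country, SUM(amount) FROM stage_sales GROUP BY country"
    )
    transformed = cur.execute("SELECT COUNT(*) FROM sales_by_country").fetchone()[0]
    log("transform", transformed)

    conn.commit()
    return transformed


conn = sqlite3.connect(":memory:")  # stand-in for the real target database
result = run_elt(conn, [("US", 10.0), ("US", 5.0), ("DE", 7.5)])
log_rows = conn.execute("SELECT step, row_count FROM elt_log ORDER BY rowid").fetchall()
```

In a real Azure implementation the load step would be a Data Factory copy activity and the transform would likely be a stored procedure, but the shape of the log table can stay just as simple.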
You do have to fully understand your customer's reporting and analysis requirements, and who will be maintaining the solution on a long-term basis. If you still wish to swan dive into the pool, here's the list!

Disclaimer: This blog post will not address Analysis Services or Power BI, as these are about data delivery and my focus today is data ingestion.

Technical Critical Success Points (CSP)

Every single line item above has CSPs. How long do you want to hang out with me reading? I'm with you! Consequently, here are my top three CSP areas.

Azure Data Factory (ADF)

Azure Data Lake (ADL)

Data Driven Ingestion Methodology

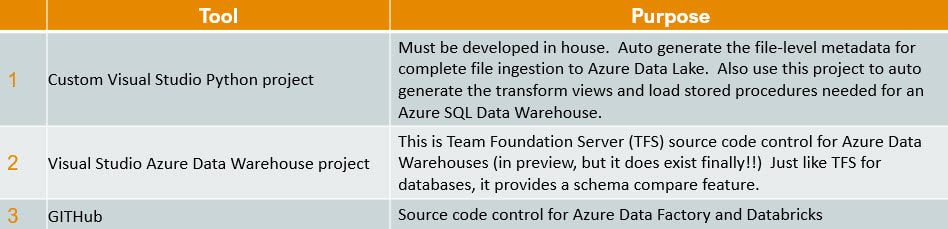

Wrapping It Up

Supporting Applications

It is only honest to share the supporting applications that help to make all of this possible.

Link to creating a Python project in Visual Studio.

Link to Azure SQL Data Warehouse Data Tools (Schema Compare) preview information. At the writing of this post, it still has to be requested from Microsoft, and direct feedback to Microsoft is expected.

Link to integrate Azure Data Factory with GitHub.

Link to integrate Databricks with GitHub.

Sample Files

Below are examples of data driven metadata and environment properties. Both are in JSON format.
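The original sample files are not reproduced here, so what follows is only a hypothetical illustration of the idea, not the files from the project. Every field name (`source_name`, `file_format`, `target_table`, `enabled`, `data_lake_root`) is an assumption made up for this sketch. It shows how a metadata file and an environment properties file, both in JSON, might be combined in Python to decide what gets ingested and from where.

```python
import json

# Hypothetical metadata: one entry per source feed to ingest.
METADATA_JSON = """
[
  {"source_name": "sales_us", "file_format": "csv",
   "target_table": "stage.sales", "enabled": true},
  {"source_name": "sales_de", "file_format": "parquet",
   "target_table": "stage.sales", "enabled": false}
]
"""

# Hypothetical environment properties: values that differ between dev, test, and prod.
ENVIRONMENT_JSON = """
{"environment": "dev", "data_lake_root": "/dev/raw"}
"""


def build_ingestion_plan(metadata_text, environment_text):
    """Combine metadata and environment properties into a list of ingestion tasks."""
    metadata = json.loads(metadata_text)
    env = json.loads(environment_text)
    plan = []
    for entry in metadata:
        if not entry["enabled"]:
            continue  # a disabled feed is skipped without any code change
        plan.append({
            "source_path": f'{env["data_lake_root"]}/{entry["source_name"]}.{entry["file_format"]}',
            "target_table": entry["target_table"],
        })
    return plan


plan = build_ingestion_plan(METADATA_JSON, ENVIRONMENT_JSON)
```

The design choice worth noticing is that adding a new country or switching from dev to prod means editing JSON, not code.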

A Bit of Humor Before You Go

Just for fun, here are some of my favorite sayings, in no particular order.


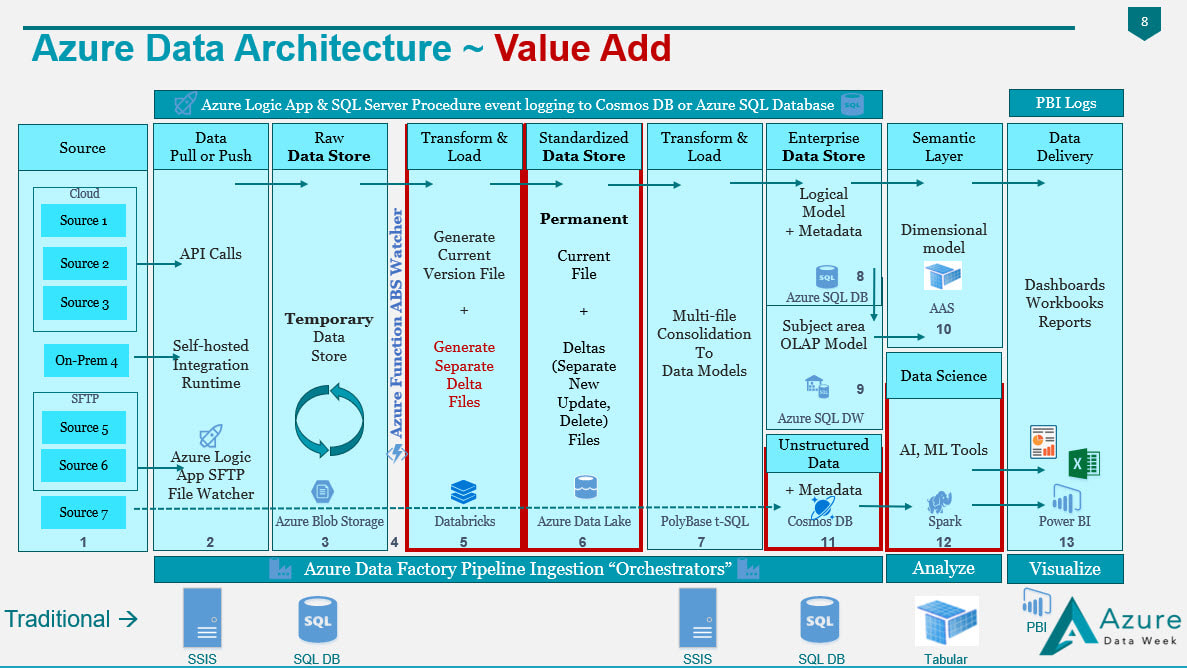

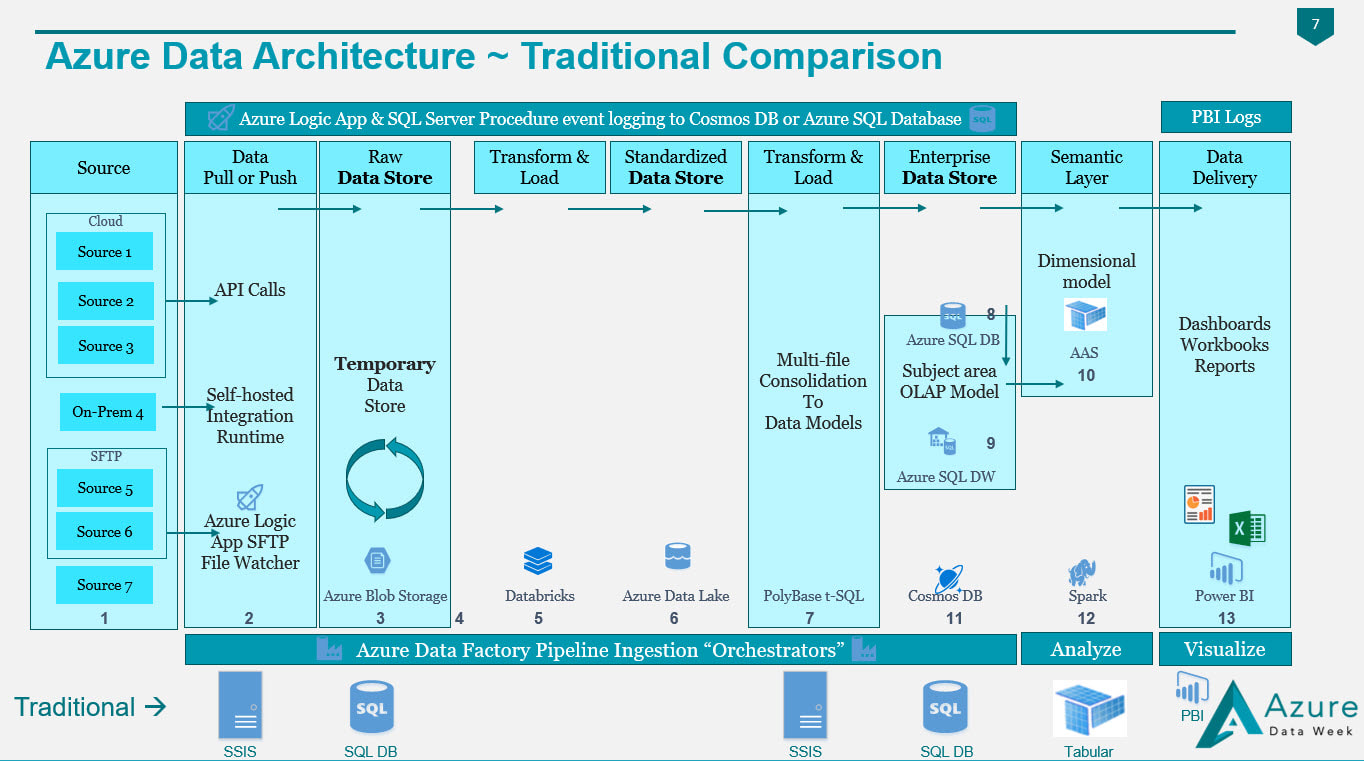

Confession: I put a lot of subtexts in this blog post in an attempt to catch how people may be describing their move from SSIS to ADF, from SQL DBs to SQL DWs, or from scheduled to event-based data ingestion. The purpose of this post is to give you a visual picture of how our well loved "traditional" tools of on-prem SQL Databases, SSIS, SSAS and SSRS are being replaced by the Azure tool stack. If you are moving from "Traditional Microsoft" to "Azure Microsoft" and need a road map, this post is for you.

Summary of the Matter: If you only read one thing, please read this: transitioning to Azure is absolutely "doable", but do not let anyone sell you "lift and shift". Azure data architecture is a new way of thinking. Decide to think differently.

First Determine Added Value: Below are snippets from a slide deck I shared during Pragmatic Works' 2018 Azure Data Week. (You can still sign up for the minimal cost of $29 and watch all 40 recorded sessions, just click here.) However, before we begin, let's have a little chat. Why in the world would anyone take on an Azure migration if their on-prem SQL database(s) and SSIS packages are humming along with optimum efficiency? The first five reasons given below are my personal favorites.

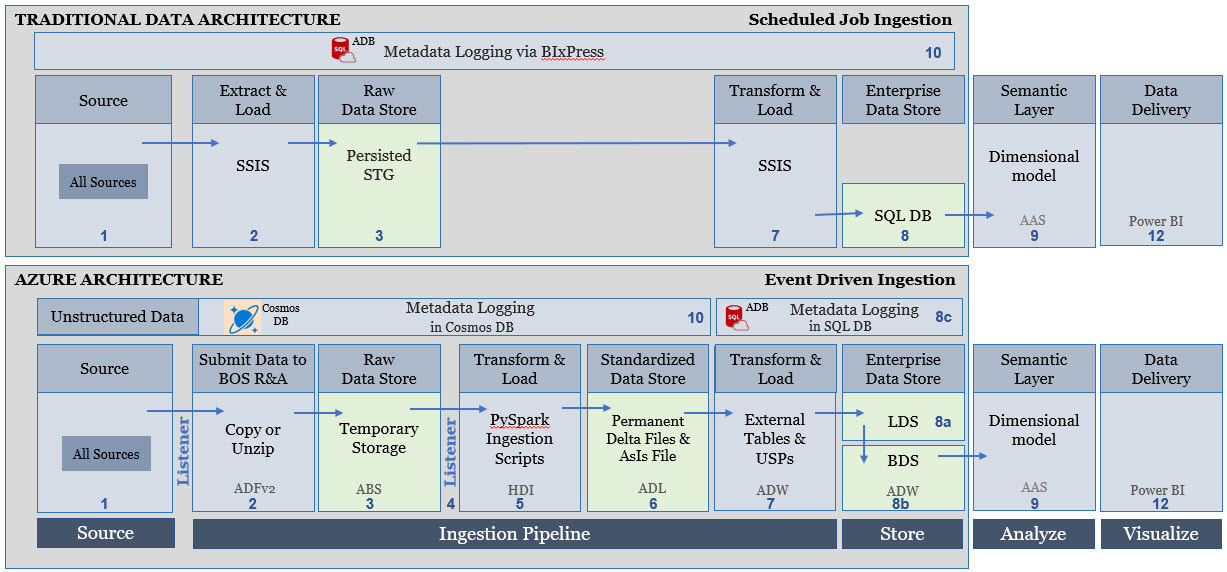

Figure 1 - Value Added by an Azure Data Architecture

If you compare my Traditional Data Architecture diagram, first posted on this blog site in 2015, and the Azure Data Architecture diagram posted in 2018, I hope you see that what makes the second superior to the first is the value add available from Azure. In both diagrams we are still moving data from "source" to "destination", but what we have with Azure is an infrastructure built for events (i.e. when a row or file is added or modified in a source), near real time data ingestion, unstructured data, and data science. In my thinking, if Azure doesn't give us added value, then why bother?

A strict 1:1 "traditional" vs "Azure" data architecture would look something like this (blue boxes only) -->

Figure 2 - Traditional Components Aligned with Azure Components

It is the "white space" showing in Figure 2 that gives us the added value for an Azure Data Architecture. A diagram that is not from Azure Data Week, but that I sometimes adapt to explain how to move from "traditional" to "Azure" data architectures, is Figure 3. It really is the exact same story as Figure 2, but I've stacked "traditional" and "Azure" in the same diagram.

Figure 3 - Traditional Components Aligned with Azure Components (Second Perspective)

Tips for Migration: Having worked with SQL Server and data warehouses since 1999 (Microsoft tool stack specifically), I am well aware of the creative solutions to get "near real time" from a SQL Agent job into an on-prem SQL Server, or to query large data sets effectively with columnstore indexing. For the sake of argument, let's say that nothing is impossible in either architecture. The point I'm trying to make here, however, is rather simple:

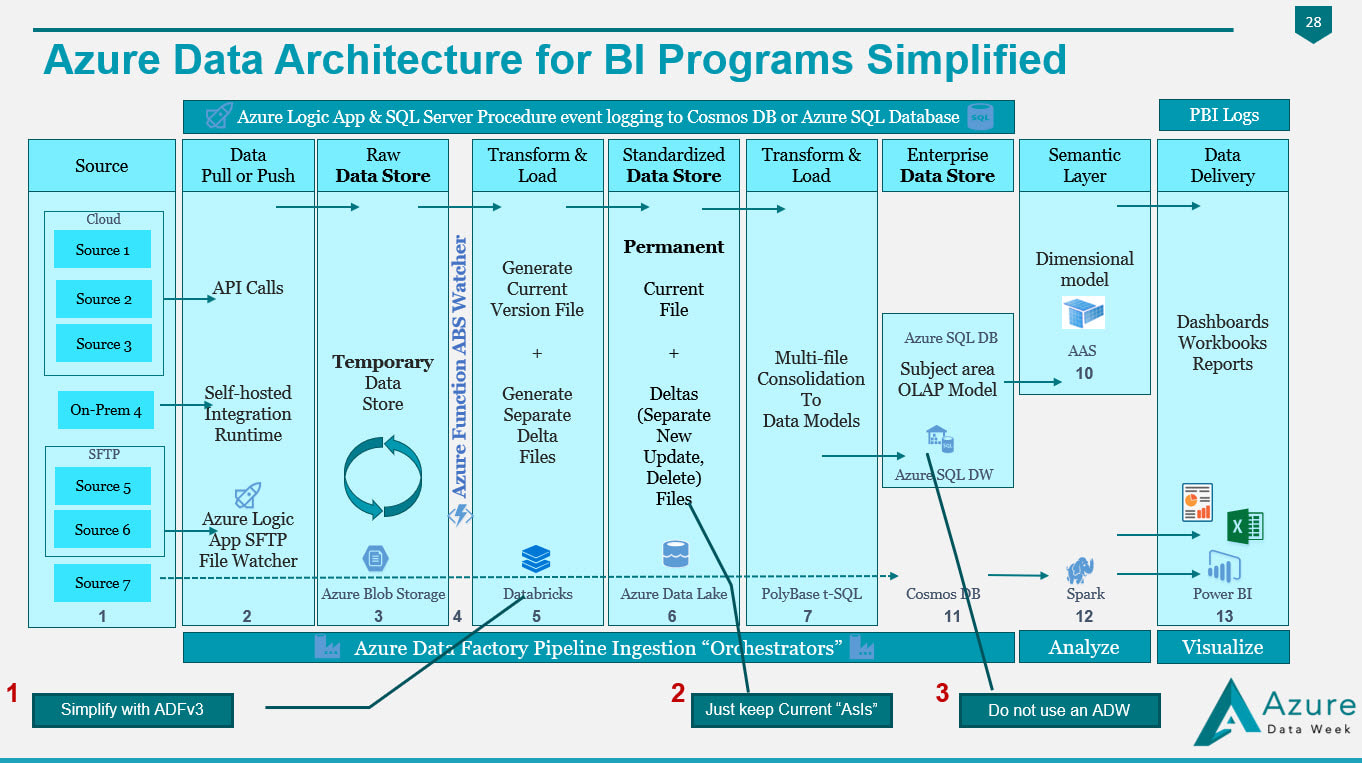

Figure 4

In Figure 4, we have made the following substitutions to simplify migration:

1. We have selected Azure Data Factory version 3 to replace the Python of Databricks or the PySpark of HDInsight.
2. We have removed the change data capture files in Azure Data Lake and are keeping simple "is most recent" files.
3. Unless you have data volumes to justify a data warehouse, which should have a minimum of 1 million rows for each of its 60 distributions, go with an Azure Database! You'll avoid the many creative solutions that Azure Data Warehouse requires to offset its unsupported table features and stored procedure limitations.

Every company I work with has a different motivation for moving to Azure, and I'm surely not trying to put you into my box. The diagrams shared on my blog site change with every engagement, as no two companies have the same needs and business goals. Please allow me to encourage you to start thinking about what your Azure Data Architecture might look like, and what your true value add talking points for a migration to Azure might be.

Moving onward and upward, my technical friend,

~Delora Bradish

p.s. If you have arrived at this blog post looking for a Database vs Data Warehouse, or Multidimensional vs Tabular discussion, those really are not Azure discussion points as much as they are data volume discussion points; consequently, I did not blog about these talking points here. Please contact me via www.pragmaticworks.com to schedule on site working sessions in either area. |
