
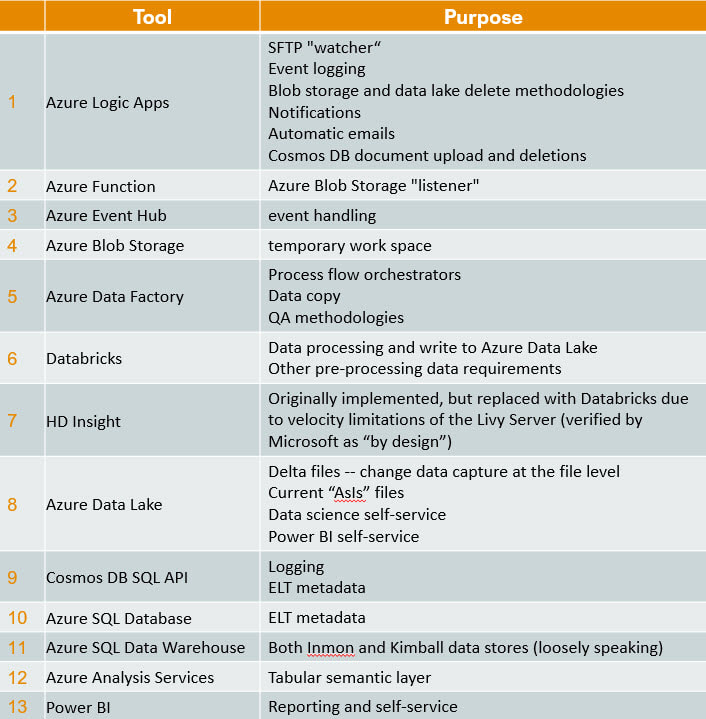

This is my second attempt to articulate lessons learned from a recent global Azure implementation for data and analytics. My first blog post ended up centered on project management; you can find those project management tips here. While writing it, it became apparent to me that the tool stack isn't the most important thing. I'm pretty hard-core Microsoft, but in the end, success was determined by how well we coached and trained the team, not by which field we played on.

Turning my attention in this blog post to technical success points, please allow me to start by saying that with over twenty countries dropping data into a shared Azure Data Lake, my use of "global" (above) is no exaggeration. I am truly not making this all up by compiling theories from Microsoft Docs. Second, the question people ask me most often is "What tools did you use?", because migrating from on-prem Microsoft SSIS or Informatica to the cloud can feel like jumping off the high dive at the community pool for the first time. Consequently, I'm going to provide the tool stack list right out of the gate. You can find a supporting diagram for this data architecture here.

Microsoft Azure Tool Stack for Data & Analytics

Hold on! Your eyes have already skipped to the list, but before you make an "every man for himself" move and bolt out of the Microsoft community pool, please read my migration blog post. There are options! For example, I just stood up an Azure for Data & Analytics solution that has no Logic Apps, Azure Functions, Event Hubs, Blob Storage, Databricks, HDInsight (HDI), or Data Lake. The solution is not event-driven and takes an ELT (extract, load, and then transform) approach. It reads from sources via Azure Data Factory, writes to an Azure database, and logs the ELT activities in an Azure database as well. Now, how simple is that? You don't have to build the Taj MaSolution to be successful.
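To make the simple ELT pattern described above a little more concrete, here is a minimal sketch under stated assumptions. It is not Azure Data Factory code: Python's built-in `sqlite3` stands in for the Azure database, a list of tuples stands in for the source that ADF would copy from, and the table names (`stg_sales`, `dw_sales_by_country`, `etl_log`) are hypothetical. What it shows is the order of operations that makes this ELT rather than ETL (raw rows are loaded first and transformed afterwards, inside the database), with each activity logged to a table in the same database.

```python
import sqlite3
from datetime import datetime, timezone

# Minimal ELT-with-logging sketch. sqlite3 is a local stand-in for the Azure
# database, and plain Python stands in for the Azure Data Factory copy step.
# All table and column names are hypothetical.

SCHEMA = """
CREATE TABLE stg_sales (country TEXT, amount REAL NOT NULL);
CREATE TABLE dw_sales_by_country (country TEXT PRIMARY KEY, total_amount REAL);
CREATE TABLE etl_log (
    log_id INTEGER PRIMARY KEY,
    step TEXT NOT NULL,
    status TEXT NOT NULL,
    row_count INTEGER NOT NULL,
    logged_at TEXT NOT NULL
);
"""


def create_schema(conn):
    """Create the hypothetical staging, target, and log tables."""
    conn.executescript(SCHEMA)


def log_activity(conn, step, status, row_count):
    """Append one ELT activity record to the log table."""
    conn.execute(
        "INSERT INTO etl_log (step, status, row_count, logged_at) VALUES (?, ?, ?, ?)",
        (step, status, row_count, datetime.now(timezone.utc).isoformat()),
    )


def run_elt(conn, source_rows):
    """Load raw rows into staging, then transform them inside the database."""
    try:
        # Extract + Load: land the raw rows in staging as-is (truncate and reload).
        conn.execute("DELETE FROM stg_sales")
        conn.executemany(
            "INSERT INTO stg_sales (country, amount) VALUES (?, ?)", source_rows
        )
        log_activity(conn, "load_stg_sales", "succeeded", len(source_rows))

        # Transform: clean and aggregate in SQL, after the data is already loaded.
        conn.execute("DELETE FROM dw_sales_by_country")
        conn.execute(
            "INSERT INTO dw_sales_by_country (country, total_amount) "
            "SELECT UPPER(TRIM(country)), SUM(amount) FROM stg_sales "
            "GROUP BY UPPER(TRIM(country))"
        )
        loaded = conn.execute("SELECT COUNT(*) FROM dw_sales_by_country").fetchone()[0]
        log_activity(conn, "transform_sales_by_country", "succeeded", loaded)
        conn.commit()
    except sqlite3.Error:
        conn.rollback()  # discard the partial load
        log_activity(conn, "run_elt", "failed", 0)
        conn.commit()  # keep the failure record
        raise


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    create_schema(conn)
    run_elt(conn, [("us ", 10.0), ("US", 5.0), ("de", 7.5)])
    print(conn.execute(
        "SELECT country, total_amount FROM dw_sales_by_country ORDER BY country"
    ).fetchall())
    # [('DE', 7.5), ('US', 15.0)]
```

Logging the failure after the rollback, and then committing, keeps a record of the failed run even though its partial load is discarded.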
You do have to fully understand your customer's reporting and analysis requirements, and who will be maintaining the solution over the long term. If you still wish to swan dive into the pool, here's the list!

Disclaimer: This blog post will not address Analysis Services or Power BI, as these are about data delivery and my focus today is data ingestion.

Technical Critical Success Points (CSP)

Every single line item above has CSPs. How long do you want to hang out with me reading? I'm with you! Consequently, here are my top three CSP areas.

Azure Data Factory (ADF)

Azure Data Lake (ADL)

Data Driven Ingestion Methodology

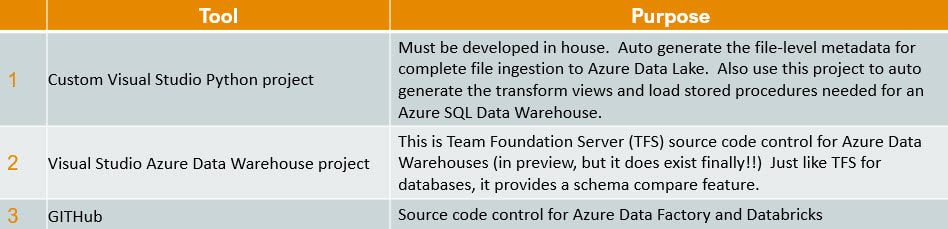

Wrapping It Up

Supporting Applications

It is only fair to share the three supporting applications that help make all of this possible.

Link to creating a Python project in Visual Studio.

Link to Azure SQL Data Warehouse Data Tools (Schema Compare) preview information. At the time of writing, access still has to be requested from Microsoft, and Microsoft expects direct feedback.

Link to integrating Azure Data Factory with GitHub.

Link to integrating Databricks with GitHub.

Sample Files

Below are examples of data-driven metadata and environment properties. Both are in JSON format.
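As a hypothetical illustration of the data-driven idea (not a reproduction of the sample files): one JSON document lists what to ingest, a second holds environment-specific properties, and generic code turns the two into concrete load steps. Every key name here (`entities`, `load_type`, `watermark_column`, `lake_container`, and so on) and the folder layout are assumptions made for the sketch. In Azure Data Factory, a similar pattern is commonly built with a Lookup activity that reads the metadata and a ForEach activity that iterates over it; the Python below only mirrors that logic.

```python
import json

# Hypothetical metadata file: one entry per source object to ingest.
# Key names are illustrative assumptions, not the author's sample files.
METADATA_JSON = """
{
  "entities": [
    {"source_system": "erp", "source_object": "dbo.Customer", "load_type": "full", "enabled": true},
    {"source_system": "erp", "source_object": "dbo.SalesOrder", "load_type": "incremental",
     "watermark_column": "ModifiedDate", "enabled": true},
    {"source_system": "crm", "source_object": "Account", "load_type": "full", "enabled": false}
  ]
}
"""

# Hypothetical environment properties: values that differ between dev, test, and prod.
ENVIRONMENT_JSON = """
{"environment": "dev", "lake_container": "raw"}
"""


def build_load_plan(metadata, environment):
    """Combine metadata entries with environment properties into load steps."""
    plan = []
    for entity in metadata["entities"]:
        if not entity.get("enabled", True):
            continue  # a source is switched off by editing metadata, not code
        object_name = entity["source_object"].split(".")[-1]
        step = {
            "environment": environment["environment"],
            "source": f'{entity["source_system"]}:{entity["source_object"]}',
            "target_folder": f'{environment["lake_container"]}/{entity["source_system"]}/{object_name}',
            "load_type": entity["load_type"],
        }
        if entity["load_type"] == "incremental":
            # Incremental loads need a column to track what has already been loaded.
            if "watermark_column" not in entity:
                raise ValueError(f'{step["source"]} is incremental but has no watermark_column')
            step["watermark_column"] = entity["watermark_column"]
        plan.append(step)
    return plan


if __name__ == "__main__":
    plan = build_load_plan(json.loads(METADATA_JSON), json.loads(ENVIRONMENT_JSON))
    for step in plan:
        print(step)
```

With this approach, onboarding another source object is a change to the metadata file rather than to the pipeline code, and promoting to another environment only swaps the properties file.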

A Bit of Humor Before You Go

Just for fun, here are some of my favorite sayings, in no particular order:
