Microsoft has built multiple data flow performance features into SSIS, which you can read about here (http://msdn.microsoft.com/en-us/library/ms141031.aspx). The list below is not all-inclusive, but these best practices will help you avoid the majority of common SSIS oversights and mistakes.

1. Give your SSIS process its own server. Data integration needs primary memory, and lots of it, which is a different resource profile from data storage. Granted, if your entire ETL process runs in just a few hours during the night when no end users are connecting, the case can be made to share servers; more often, however, real-time requirements and on-demand transformation are reasons to give your ETL process dedicated hardware.

2. Only update rows in your data warehouse that have been changed or deleted from your source system(s).

3. Install and test for adequate RAM on the SSIS server. Sorting 2TB of data requires 2TB of RAM, and SSIS will start to write the data to disk once all available memory is taken. As part of your Test / QA methodology, use Performance Monitor and have your network team notify you whenever Buffers Spooled goes above zero or Avg. Disk sec/Transfer rises above 10 ms. Test and monitor the following PerfMon counters.

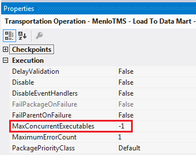

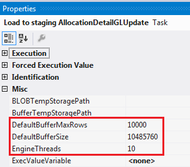

4. Take note of your MaxConcurrentExecutables package property. This defines how many tasks can run simultaneously. The default value is negative one (-1), which means the number of physical or logical processors plus two (2) is the number of control flow items that can be executed in parallel. It is generally recommended that you leave this setting at the default unless you are absolutely sure that parallelism is causing an issue.

5. Adequately test and update data flow properties that impact performance.
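To make the -1 default in item 4 concrete, here is a minimal Python sketch of how the setting resolves to a number of parallel executables. The function name `effective_max_concurrent_executables` is made up for illustration and is not part of any SSIS API. Rejecting zero and other negative values is an assumption about how the property behaves, so verify it against your SSIS version.

```python
import os


def effective_max_concurrent_executables(setting: int, processor_count: int) -> int:
    """Resolve a MaxConcurrentExecutables value to a parallel task count.

    Hypothetical helper for illustration only; SSIS does this internally.
    """
    if processor_count < 1:
        raise ValueError("processor_count must be at least 1")
    if setting == -1:
        # Default described above: processors plus two.
        return processor_count + 2
    if setting < 1:
        # Assumption: zero and other negative values are treated as invalid.
        raise ValueError(f"invalid MaxConcurrentExecutables value: {setting}")
    # Any positive value is used as an explicit cap.
    return setting


if __name__ == "__main__":
    cpus = os.cpu_count() or 1
    print("Default (-1) on this machine allows",
          effective_max_concurrent_executables(-1, cpus),
          "parallel executables")
```

On a server with 8 logical processors, the default of -1 resolves to 10 parallel executables, while an explicit setting of 4 caps the package at 4.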


1. A good SSIS package design will be repeatable. If you find yourself adding new tasks and data flow exceptions to your packages, you need to stop and reevaluate the original layout. One SSIS project will have several “templates” that are reused without a single design change for the life of the data warehouse. You should only have one or two template variations for each of these areas:

2. Plan for restartability. As of SQL Server 2014, SSIS checkpoint files still did not work with sequence containers. (The whole sequence container will restart, including successfully completed tasks.) The solution is to build restartability into your ABC framework.

3. Verify your ETL process. Just because your ETL finished without error, or you successfully handled your errors, doesn’t necessarily mean the SUM() of SourceSystemNet equals the SUM() of SSAScubeNet. Use your verification process this way:

   a. It should be the final step of your ETL / ELT process.

   b. It should confirm that strategic SUM() and row COUNT() values are accurate.

   c. It should report on rows dropped during the ETL by an INNER JOIN or WHERE clause.

   d. It should automatically send emails for errors that have been allowed to “report and pass”.

4. Collect metadata! Audit, balance and control (ABC) should be planned for and implemented from the very first day. You should store this ABC data in a separate SQL Server database, and at any point in time be able to determine the following:

5. Trap for errors both through On Error events and through precedence constraints. There are two types of errors to successfully handle in an ETL / ELT process.
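The verification step in item 3 can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Totals` class and `reconcile` function are hypothetical names, and in a real process the counts and sums would come from queries against your source system and your cube or warehouse rather than from hard-coded values.

```python
from dataclasses import dataclass
from decimal import Decimal
from typing import List


@dataclass
class Totals:
    """Row COUNT() and strategic SUM() captured from one side of the ETL."""
    row_count: int
    net_sum: Decimal


def reconcile(source: Totals, target: Totals,
              tolerance: Decimal = Decimal("0")) -> List[str]:
    """Return a list of discrepancies; an empty list means the load balanced."""
    issues = []
    if source.row_count != target.row_count:
        dropped = source.row_count - target.row_count
        issues.append(
            f"row count mismatch: source={source.row_count}, "
            f"target={target.row_count}, difference={dropped}"
        )
    if abs(source.net_sum - target.net_sum) > tolerance:
        issues.append(
            f"sum mismatch: source={source.net_sum}, target={target.net_sum}"
        )
    return issues


if __name__ == "__main__":
    # Made-up figures: two rows were filtered out somewhere in the ETL.
    src = Totals(row_count=1000, net_sum=Decimal("52340.75"))
    tgt = Totals(row_count=998, net_sum=Decimal("52100.25"))
    for issue in reconcile(src, tgt):
        print(issue)
```

Returning a list instead of raising on the first mismatch fits the “report and pass” idea: the final ETL step can email every discrepancy it finds in one message.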

For a full list of MS BI Best practices, download the following file:


